LightEval — Test any LLM against any Benchmark with 1 CLI Command

The HuggingFace native framework for benchmarking LLMs en masse

Large language models (LLMs) are changing the way we interact with computers. But with hundreds of open-source LLMs and thousands of datasets to benchmark them on, evaluation is getting out of hand.

You can find all available benchmarks here.

That is why an internal tool called LightEval was created: to evaluate any LLM across thousands of datasets with one CLI command.

Here’s What You’ll Learn in This Article:

How LightEval works Under-the-Hood

How You Can Benchmark any LLM in Python

How to Build Apps on Top of LightEval

What is LightEval?

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron.

Evaluating LLMs is crucial for understanding their capabilities and limitations, yet it poses significant challenges due to their complex and opaque nature. LightEval facilitates this evaluation process by enabling LLMs to be assessed on academic benchmarks like MMLU or IFEval, providing a structured approach to gauge their performance across diverse tasks.

How does it work under the hood?

The lighteval library works in the following 5 steps:

1. Configuration (Create model and task configuration):

This step creates the configuration for the model and tasks.

It includes loading the model from the Hugging Face Hub using the provided configurations.

It also loads the task information from the registry.

2. Data Preparation (Load documents and requests):

This step creates the data for the evaluation.

It includes loading documents and creating requests based on the tasks.

It uses the loaded model to create few-shot examples if required.

3. Seeding (Set random seeds):

This step sets the random seed for reproducibility.

It sets the seed for both Python's random module and NumPy for consistent results.

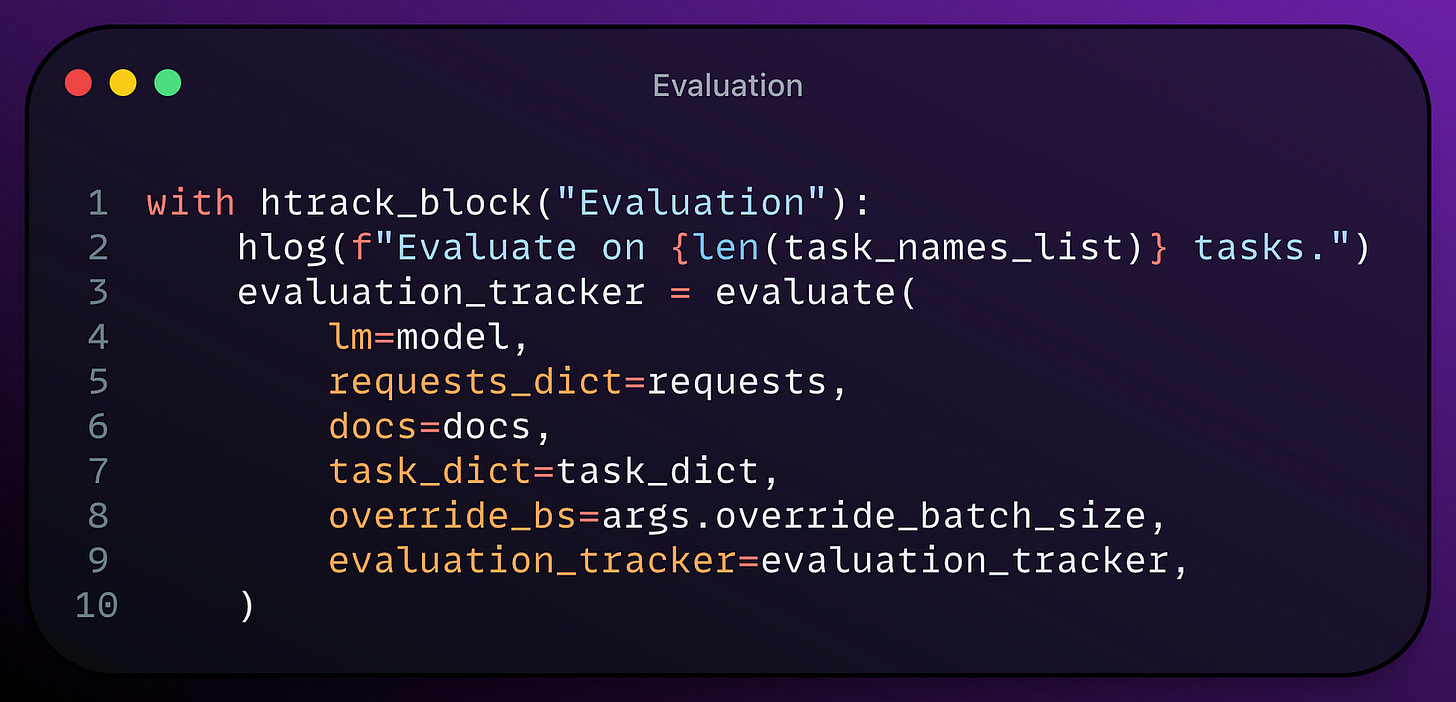

4. Evaluation (Evaluate the model on the tasks):

This step performs the evaluation on the tasks.

It uses the loaded model, data and task information to compute the metrics.

5. Result Processing (Compile and save the results):

This step aggregates the results from the evaluation.

It computes additional statistics and stores the results.

Finally, it cleans up the temporary files and prints the results.
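To make the five steps above concrete, here is a minimal sketch of the same flow in plain Python. It is not lighteval's code: `EvalConfig`, `load_documents`, `toy_model`, and the `arithmetic` task are hypothetical stand-ins, and the "model" just evaluates tiny arithmetic prompts so the example runs without downloading anything.

```python
import random
from dataclasses import dataclass
from statistics import mean


@dataclass
class EvalConfig:
    """Step 1: hypothetical configuration object (not a lighteval class)."""
    model_name: str
    tasks: list[str]
    seed: int = 1234


def load_documents(task: str) -> list[dict]:
    """Step 2: stand-in for loading a task's documents from a dataset."""
    toy_data = {
        "arithmetic": [
            {"query": "2 + 2 =", "gold": "4"},
            {"query": "3 * 3 =", "gold": "9"},
        ],
    }
    return toy_data[task]


def set_seeds(seed: int) -> None:
    """Step 3: seed Python's RNG, and NumPy's too when it is installed."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass  # NumPy is optional for this sketch


def toy_model(query: str) -> str:
    """Stand-in for a real LLM: evaluates the arithmetic in the prompt."""
    left, op, right = query.rstrip("= ").split()
    a, b = int(left), int(right)
    return str(a + b if op == "+" else a * b)


def evaluate(config: EvalConfig) -> dict:
    """Steps 4 and 5: score every task, then aggregate the results."""
    set_seeds(config.seed)
    per_task = {}
    for task in config.tasks:
        docs = load_documents(task)
        scores = [float(toy_model(d["query"]) == d["gold"]) for d in docs]
        per_task[task] = {"exact_match": mean(scores)}
    return {"model": config.model_name, "results": per_task}


report = evaluate(EvalConfig(model_name="toy-model", tasks=["arithmetic"]))
print(report)
```

The real library does far more at each step (batching, few-shot sampling, many metrics), but the shape is the same: configuration in, a results dictionary out.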

How You Can Benchmark any LLM in Python

Installation

Clone the repo:

git clone https://github.com/huggingface/lighteval.git

cd lighteval

Create a virtual environment using virtualenv or conda depending on your preferences. We require Python 3.10 or above:

conda create -n lighteval python=3.10 && conda activate lighteval

pip install .

Usage

We provide two main entry points to evaluate models:

run_evals_accelerate.py: evaluate models on CPU or one or more GPUs using 🤗 Accelerate.

run_evals_nanotron.py: evaluate models in distributed settings using ⚡️ Nanotron.

For most users, I recommend using the 🤗 Accelerate backend.

Evaluate a Model on 1 or more GPUs

To evaluate a model on one or more GPUs, first create a multi-GPU config by running:

accelerate config

You can then evaluate a model using data parallelism as follows:

accelerate launch --multi_gpu --num_processes=<num_gpus> run_evals_accelerate.py \
    --model_args="pretrained=<path to model on the hub>" \
    --tasks <task parameters> \
    --output_dir output_dir

Here, --tasks refers to a comma-separated list of supported tasks from the metadata table, each in the format:

suite|task|num_few_shot|{0 or 1 to automatically reduce `num_few_shot` if prompt is too long}

Spin Up Inference Endpoints

If you want to evaluate a model by spinning up inference endpoints, using adapter/delta weights, or setting more complex configuration options, you can load models using a configuration file. This is done as follows:

accelerate launch --multi_gpu --num_processes=<num_gpus> run_evals_accelerate.py \
    --model_config_path="<path to your model configuration>" \
    --tasks <task parameters> \
    --output_dir output_dir

Evaluate a Model on Extended, Community, or Custom Tasks

Beyond the default tasks provided in lighteval, which are listed in the tasks_table.jsonl file, you can use lighteval to evaluate models on tasks that require special processing (or have been added by the community). These tasks have their own evaluation suites and are defined as follows:

extended: tasks that have complex pre- or post-processing and are added by the lighteval maintainers. See the extended folder for examples.

community: tasks that have been added by the community. See the community_tasks folder for examples.

custom: tasks that are defined locally and not present in the core library. Use this suite if you want to experiment with designing a special metric or task.

For example, to run an extended task like ifeval, you can run:

# --use_chat_template is optional; pass it to run the evaluation with the chat template
python run_evals_accelerate.py \
    --model_args "pretrained=HuggingFaceH4/zephyr-7b-beta" \
    --use_chat_template \
    --tasks "extended|ifeval|0|0" \
    --output_dir "./evals"

Build an AWS SageMaker App with LightEval

P1: Setup Environment

!pip install sagemaker --upgrade --quiet

If you are going to use SageMaker in a local environment, you need access to an IAM role with the required permissions for SageMaker. You can find out more about it here.

P2: LightEval Evaluation Config

LightEval includes scripts to evaluate LLMs on common benchmarks like MMLU, TruthfulQA, IFEval, and more. LightEval was inspired by the EleutherAI LM Evaluation Harness, which is used to evaluate models on the Hugging Face Open LLM Leaderboard.

We are going to use Amazon SageMaker Managed Training to evaluate the model, so we will leverage the script available in lighteval. The Hugging Face DLC (Deep Learning Container) does not have lighteval installed, which means we need to provide a requirements.txt file to install the required dependencies.

First, let's load the run_evals_accelerate.py script and create a requirements.txt file with the required dependencies.

In lighteval, the evaluation is done by running the run_evals_accelerate.py script. The script takes a task argument which is defined as suite|task|num_few_shot|{0 or 1 to automatically reduce num_few_shot if prompt is too long}. Alternatively, you can also provide a path to a txt file with the tasks you want to evaluate the model on, which we are going to do. This makes it easier for you to extend the evaluation to other benchmarks.
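The four-field task format is easy to get wrong by hand, so here is a small sketch of how such a string could be parsed and validated before launching a job. `TaskSpec`, `parse_task_spec`, and `parse_task_list` are hypothetical helpers written for this article, not part of lighteval.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskSpec:
    suite: str
    task: str
    num_few_shot: int
    truncate_few_shot: bool  # True when the last field is "1"


def parse_task_spec(spec: str) -> TaskSpec:
    """Parse one 'suite|task|num_few_shot|0-or-1' string."""
    parts = spec.strip().split("|")
    if len(parts) != 4:
        raise ValueError(f"expected 4 '|'-separated fields, got {len(parts)}: {spec!r}")
    suite, task, few_shot, truncate = parts
    if truncate not in {"0", "1"}:
        raise ValueError(f"last field must be 0 or 1, got {truncate!r}")
    return TaskSpec(suite, task, int(few_shot), truncate == "1")


def parse_task_list(arg: str) -> list[TaskSpec]:
    """Parse a comma-separated list of task strings."""
    return [parse_task_spec(s) for s in arg.split(",") if s.strip()]


specs = parse_task_list("extended|ifeval|0|0")
print(specs)
```

A check like this catches a missing field or a stray character locally, instead of after a training instance has already started.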

We are going to evaluate the model on the TruthfulQA benchmark with 0 few-shot examples. TruthfulQA is a benchmark designed to measure whether a language model generates truthful answers to questions, encompassing 817 questions across 38 categories including health, law, finance, and politics.
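As a rough sketch, the tasks file can be written with a few lines of Python. The task identifier `lighteval|truthfulqa:mc|0|0` and the one-task-per-line layout are assumptions on my part; check the tasks table of your lighteval version for the exact name. The `scripts/tasks.txt` path is also just an illustrative choice, and the example writes into a temporary directory so it leaves nothing behind.

```python
import tempfile
from pathlib import Path

# Assumed identifier for TruthfulQA with 0 few-shot examples;
# verify it against the tasks table of your lighteval version.
TRUTHFULQA_TASK = "lighteval|truthfulqa:mc|0|0"


def write_tasks_file(path: Path, tasks: list[str]) -> Path:
    """Write one task string per line, creating parent folders if needed."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text("\n".join(tasks) + "\n", encoding="utf-8")
    return path


def read_tasks_file(path: Path) -> list[str]:
    """Read the task strings back, skipping blank lines."""
    lines = path.read_text(encoding="utf-8").splitlines()
    return [line.strip() for line in lines if line.strip()]


with tempfile.TemporaryDirectory() as tmp:
    tasks_file = write_tasks_file(Path(tmp) / "scripts" / "tasks.txt", [TRUTHFULQA_TASK])
    loaded_tasks = read_tasks_file(tasks_file)

print(loaded_tasks)
```

To extend the evaluation to other benchmarks, you would append more task strings to the list.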

P3: Evaluate Zephyr 7B on TruthfulQA on Amazon SageMaker

In this example we are going to evaluate HuggingFaceH4/zephyr-7b-beta on the TruthfulQA benchmark, which is part of the Open LLM Leaderboard.

In addition to the task argument we need to define:

model_args: Hugging Face Model ID or path, defined as pretrained=HuggingFaceH4/zephyr-7b-beta

model_dtype: The model data type, defined as bfloat16, float16 or float32

output_dir: The directory where the evaluation results will be saved, e.g. /opt/ml/model

Lighteval can also evaluate PEFT models or use chat templates; you can find more about it here.
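Here is a sketch of how these arguments might be assembled and handed to a SageMaker `HuggingFace` estimator. The helper names, the `tasks.txt` and `scripts` paths, the instance type, and the container versions are all assumptions for illustration; pick values that match your account and a currently available Hugging Face DLC. The SageMaker import is kept inside `build_estimator` so the hyperparameter helper runs without the SDK installed.

```python
ALLOWED_DTYPES = {"bfloat16", "float16", "float32"}


def build_hyperparameters(
    model_id: str,
    tasks_file: str = "tasks.txt",      # assumed file name
    model_dtype: str = "bfloat16",
    output_dir: str = "/opt/ml/model",
) -> dict:
    """Collect the script arguments described above into one dict."""
    if model_dtype not in ALLOWED_DTYPES:
        raise ValueError(f"model_dtype must be one of {sorted(ALLOWED_DTYPES)}")
    return {
        "model_args": f"pretrained={model_id}",
        "tasks": tasks_file,
        "model_dtype": model_dtype,
        "output_dir": output_dir,
    }


def build_estimator(role: str, hyperparameters: dict):
    """Create the estimator; requires the sagemaker SDK and a valid IAM role."""
    from sagemaker.huggingface import HuggingFace  # lazy import

    return HuggingFace(
        entry_point="run_evals_accelerate.py",
        source_dir="scripts",             # assumed folder with script + requirements.txt
        instance_type="ml.g5.4xlarge",    # assumed instance type
        instance_count=1,
        role=role,
        transformers_version="4.36.0",    # assumed DLC versions
        pytorch_version="2.1.0",
        py_version="py310",
        hyperparameters=hyperparameters,
    )


hyperparameters = build_hyperparameters("HuggingFaceH4/zephyr-7b-beta")
print(hyperparameters)
```

With a role in hand, `huggingface_estimator = build_estimator(role, hyperparameters)` would give you the object on which the job is started.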

We can now start our evaluation job with the .fit() method.

huggingface_estimator.fit()

After the evaluation job is finished, we can download the evaluation results from the S3 bucket. Lighteval will save the results and generations in the output_dir. The results are saved as JSON and include detailed information about each task and the model's performance. The results are available in the results key.
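Once the results file is on disk, reading it takes only the standard library. The sketch below assumes the `results` key maps each task name to a dictionary of metric values; that inner layout is my assumption, and the file written here is a placeholder with made-up names and numbers so the example runs end to end.

```python
import json
import tempfile
from pathlib import Path


def load_results(path: Path) -> dict:
    """Return the contents of the top-level 'results' key."""
    with path.open(encoding="utf-8") as f:
        payload = json.load(f)
    return payload["results"]


def format_results(results: dict) -> list[str]:
    """Render one 'task metric value' line per metric (assumed layout)."""
    lines = []
    for task, metrics in sorted(results.items()):
        for metric, value in sorted(metrics.items()):
            lines.append(f"{task:<30} {metric:<16} {value:.4f}")
    return lines


# Placeholder content: names and numbers are invented, not real scores.
placeholder = {"results": {"example_task": {"example_metric": 0.5}}}

with tempfile.TemporaryDirectory() as tmp:
    results_path = Path(tmp) / "results.json"
    results_path.write_text(json.dumps(placeholder), encoding="utf-8")
    results = load_results(results_path)

for line in format_results(results):
    print(line)
```

Point `load_results` at the JSON file you downloaded from S3 to see the real numbers for your job.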

To Conclude …

Easy Benchmarking: Lighteval simplifies evaluating large language models (LLMs) on various benchmarks. It includes scripts to run evaluations on common benchmarks like MMLU, TruthfulQA, and IFEval.

Flexibility: Lighteval supports various evaluation settings. You can evaluate models on one or more GPUs, use adapter/delta weights, or define custom tasks.

Hugging Face Integration: Lighteval integrates well with the Hugging Face ecosystem. It works with the Hugging Face Open LLM Leaderboard and is compatible with models from the Hugging Face Hub.

Enjoyed This Story?

Thanks for getting to the end of this article. My name is Tim Cvetko, and I work at the intersection of AI, business, and biology. I love to elaborate on ML concepts or write about business (VC or micro). Get in touch!
